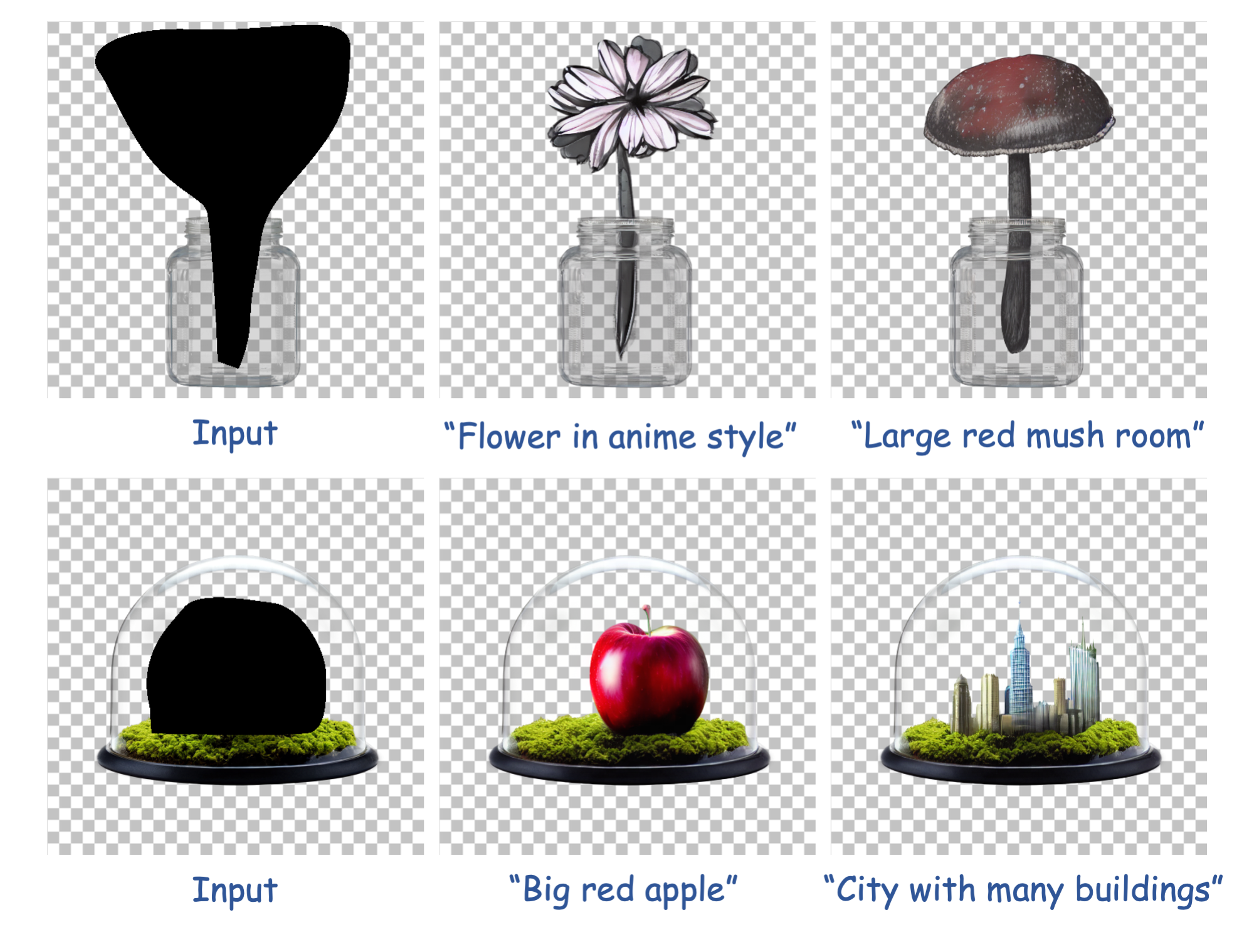

RGBA images, with the additional alpha channel, are crucial for any application that needs blending, masking, or transparency effects, making them more versatile than standard RGB images. Nevertheless, existing image inpainting methods are designed exclusively for RGB images. Conventional approaches to transparent image inpainting typically involve placing a background underneath RGBA images and employing a two-stage process: image inpainting followed by image matting. This pipeline, however, struggles to preserve transparency consistency in edited regions, and matting can introduce jagged edges along transparency boundaries. To address these challenges, we propose Trans-Adapter, a plug-and-play adapter that enables diffusion-based inpainting models to process transparent images directly. Trans-Adapter also supports controllable editing via ControlNet and can be seamlessly integrated into various community models. To evaluate our method, we introduce LayerBench, along with a novel non-reference alpha edge quality evaluation metric for assessing transparency edge quality. We conduct extensive experiments on LayerBench to demonstrate the effectiveness of our approach.
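To make the limitation of the conventional pipeline concrete, the first step (placing a background underneath an RGBA image) can be sketched with standard "over" compositing. This is a minimal illustration, not code from the paper: the function name `composite_over_background`, the white background, and the 8-bit tuple representation are all assumptions made for the example.

```python
def composite_over_background(rgba, background=(255, 255, 255)):
    """Flatten an RGBA image onto a solid background ("over" compositing).

    rgba: rows of (r, g, b, a) tuples with 8-bit values (illustrative format).
    Returns rows of (r, g, b) tuples; the alpha channel is discarded.
    """
    flattened = []
    for row in rgba:
        out_row = []
        for r, g, b, a in row:
            alpha = a / 255.0
            # Blend each foreground channel with the background channel.
            out_row.append(tuple(
                round(alpha * fg + (1.0 - alpha) * bg)
                for fg, bg in zip((r, g, b), background)
            ))
        flattened.append(out_row)
    return flattened


# One opaque, one semi-transparent, and one fully transparent pixel.
image = [[(255, 0, 0, 255), (255, 0, 0, 128), (0, 0, 0, 0)]]
flat = composite_over_background(image)
```

After flattening, the semi-transparent red pixel is indistinguishable from an opaque pink one, so the alpha values in edited regions have to be re-estimated by a matting step afterwards. That recovery step is where the consistency and edge problems described above can arise.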

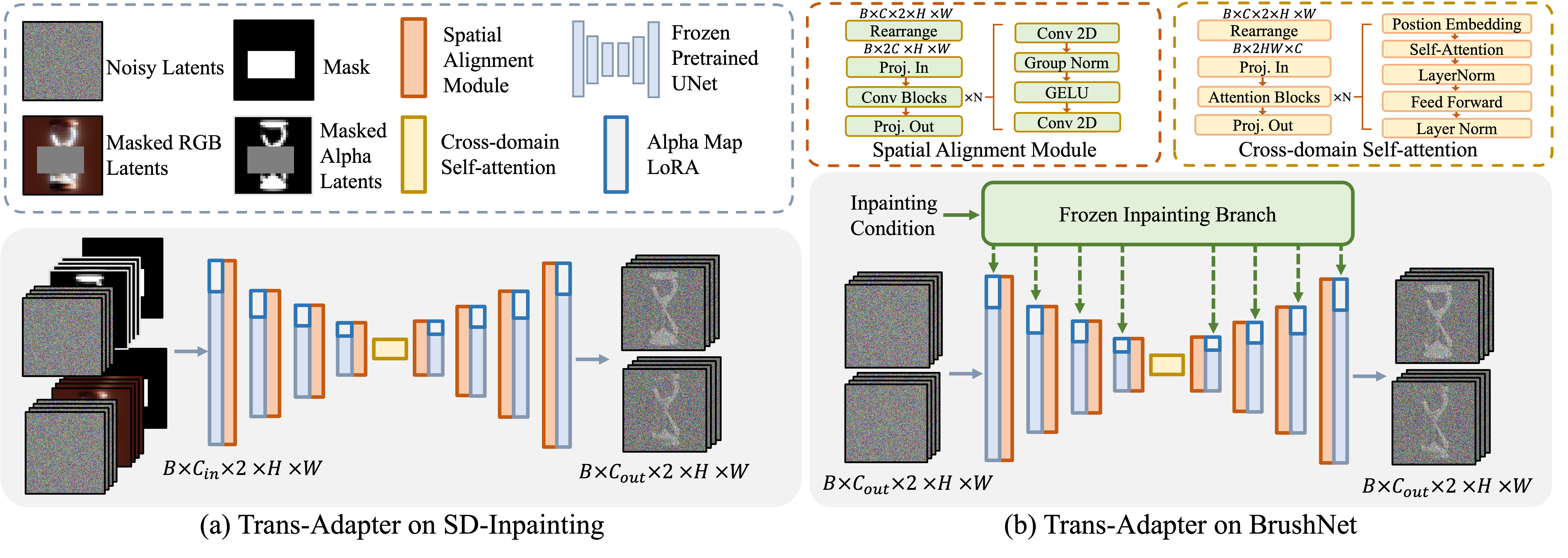

Trans-Adapter can be flexibly integrated into different image inpainting frameworks. We demonstrate two instantiations: (a) SD-Inpainting, which expands the input channels to encode the additional mask and masked image, and (b) BrushNet, which introduces an inpainting branch that accepts the inpainting conditioning. In BrushNet, the inpainting conditioning is the same as SD-Inpainting's input. Trans-Adapter enables these pipelines to process transparent images by incorporating trainable cross-domain self-attention, spatial alignment modules, and alpha map LoRA.
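The channel expansion used by SD-Inpainting can be sketched as a concatenation along the channel axis. The example below is an assumption-laden illustration rather than the authors' code: it uses the commonly used Stable Diffusion inpainting layout of 4 noisy-latent channels, 1 mask channel, and 4 masked-image latent channels, represents tensors as nested Python lists in channel-height-width order, and uses hypothetical helper names (`make_channels`, `build_inpainting_input`). Trans-Adapter's own modules (cross-domain self-attention, spatial alignment, alpha map LoRA) are not shown.

```python
def make_channels(count, height, width, fill=0.0):
    """Create a CHW tensor as nested lists (a stand-in for a real tensor)."""
    return [[[fill] * width for _ in range(height)] for _ in range(count)]


def build_inpainting_input(noisy_latent, mask, masked_image_latent):
    """Concatenate the three inputs along the channel axis.

    All inputs are CHW nested lists and must share the same spatial size.
    """
    parts = (noisy_latent, mask, masked_image_latent)
    sizes = {(len(t[0]), len(t[0][0])) for t in parts}
    if len(sizes) != 1:
        raise ValueError("all inputs must share the same height and width")
    # For CHW lists, channel-wise concatenation is plain list concatenation.
    return noisy_latent + mask + masked_image_latent


height, width = 8, 8                                   # illustrative latent size
noisy_latent = make_channels(4, height, width)         # latent being denoised
mask = make_channels(1, height, width, fill=1.0)       # 1.0 marks the region to fill
masked_image_latent = make_channels(4, height, width)  # encoded masked image

unet_input = build_inpainting_input(noisy_latent, mask, masked_image_latent)
```

Under these assumptions the denoising network receives 9 input channels instead of 4. In a BrushNet-style setup, the same mask and masked-image conditioning would instead be routed to the separate inpainting branch.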

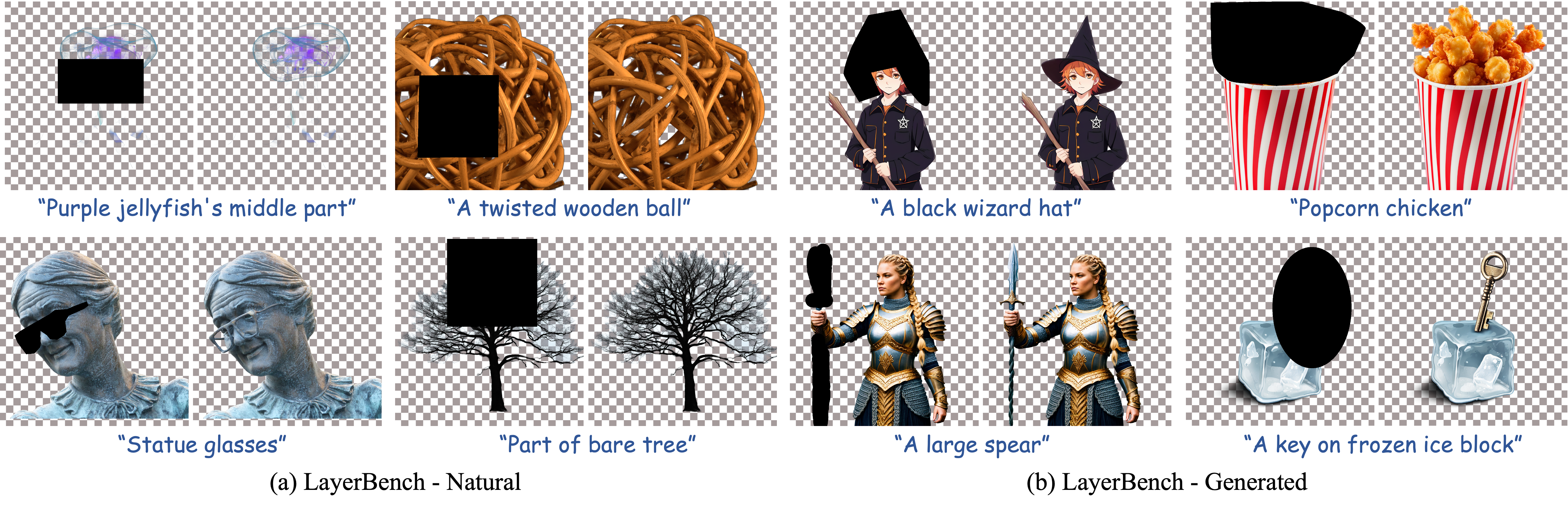

We introduce a new benchmark, LayerBench, which comprises masked images paired with their corresponding text prompts (mask-simple). To comprehensively evaluate our method's inpainting capabilities on both natural and generated images, LayerBench is divided into two subsets: LayerBench-Natural and LayerBench-Generated, each containing 400 images. The LayerBench-Natural subset features real-world images collected from online PNGstocks and matting datasets. For LayerBench-Generated, 200 images with high AestheticScore are selected from the MAGICK dataset, while the remaining 200 images are generated using LayerDiffusion applied to SDXL and the community model RealVisXL-V4.0. Our benchmark presents a variety of challenging scenarios, including images with intricate alpha channel structures, transparent objects, and diverse artistic styles.

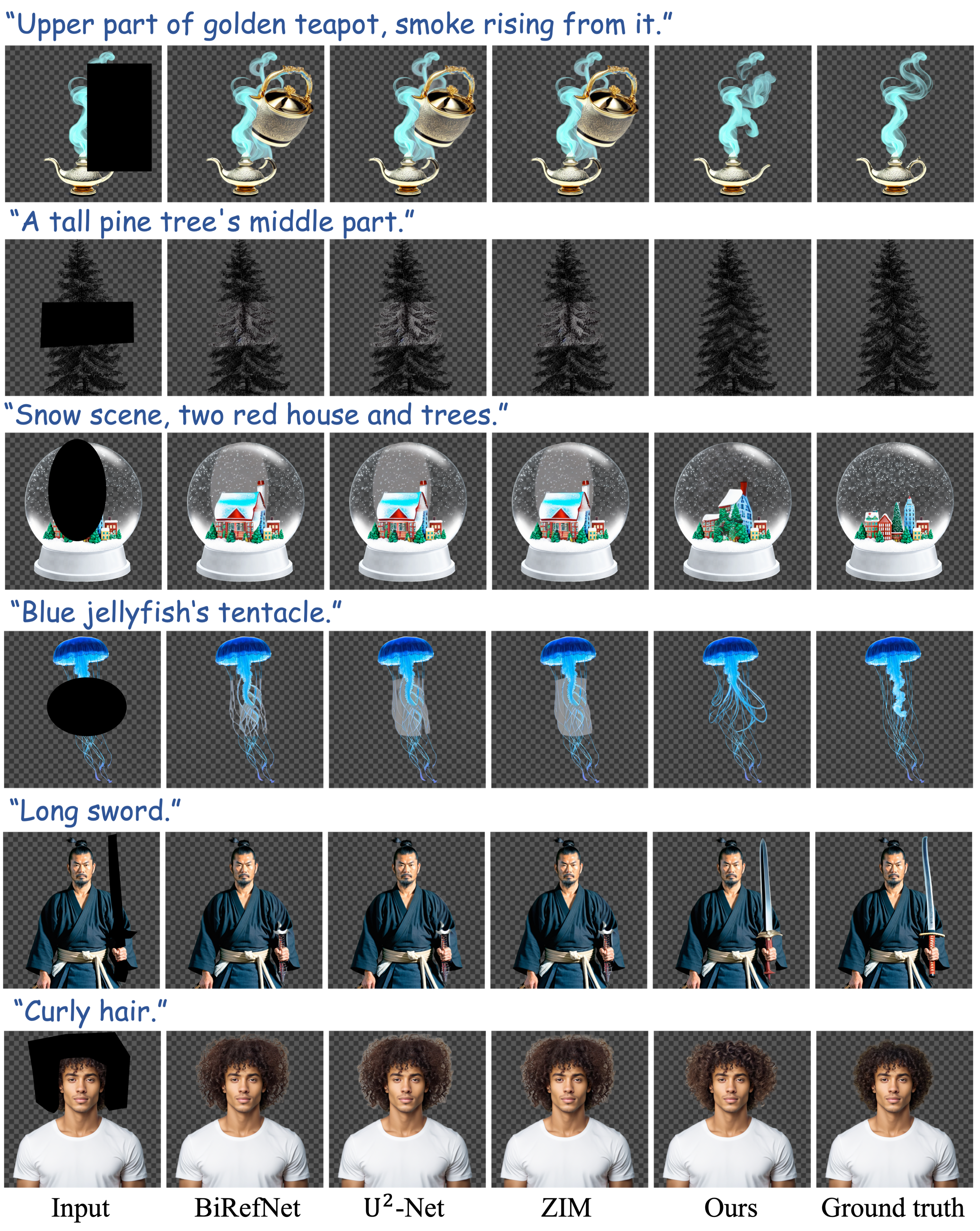

We compare Trans-Adapter on transparent image inpainting, using the SD-Inpainting framework, against pipelines built on different image matting and dichotomous image segmentation methods. Because our approach generates the alpha map and RGB simultaneously, it performs well even for complex alpha details and transparent objects.

@article{transadapter2025,
  title = {Trans-Adapter: A Plug-and-Play Framework for Transparent Image Inpainting},
  author = {Dai, Yuekun and Li, Haitian and Zhou, Shangchen and Loy, Chen Change},
  journal = {ICCV},
  year = {2025},
}